Vibe Coding an App

I’ve built an app from zero to production through Vibe Coding. In this article, I will describe my experience and share some tips and tricks (first tip: get into software engineering, you will need it).

Introduction

Vibe Coding.

The new buzzword for your next PowerPoint presentation. Not that new anymore, but definitely still a buzzword.

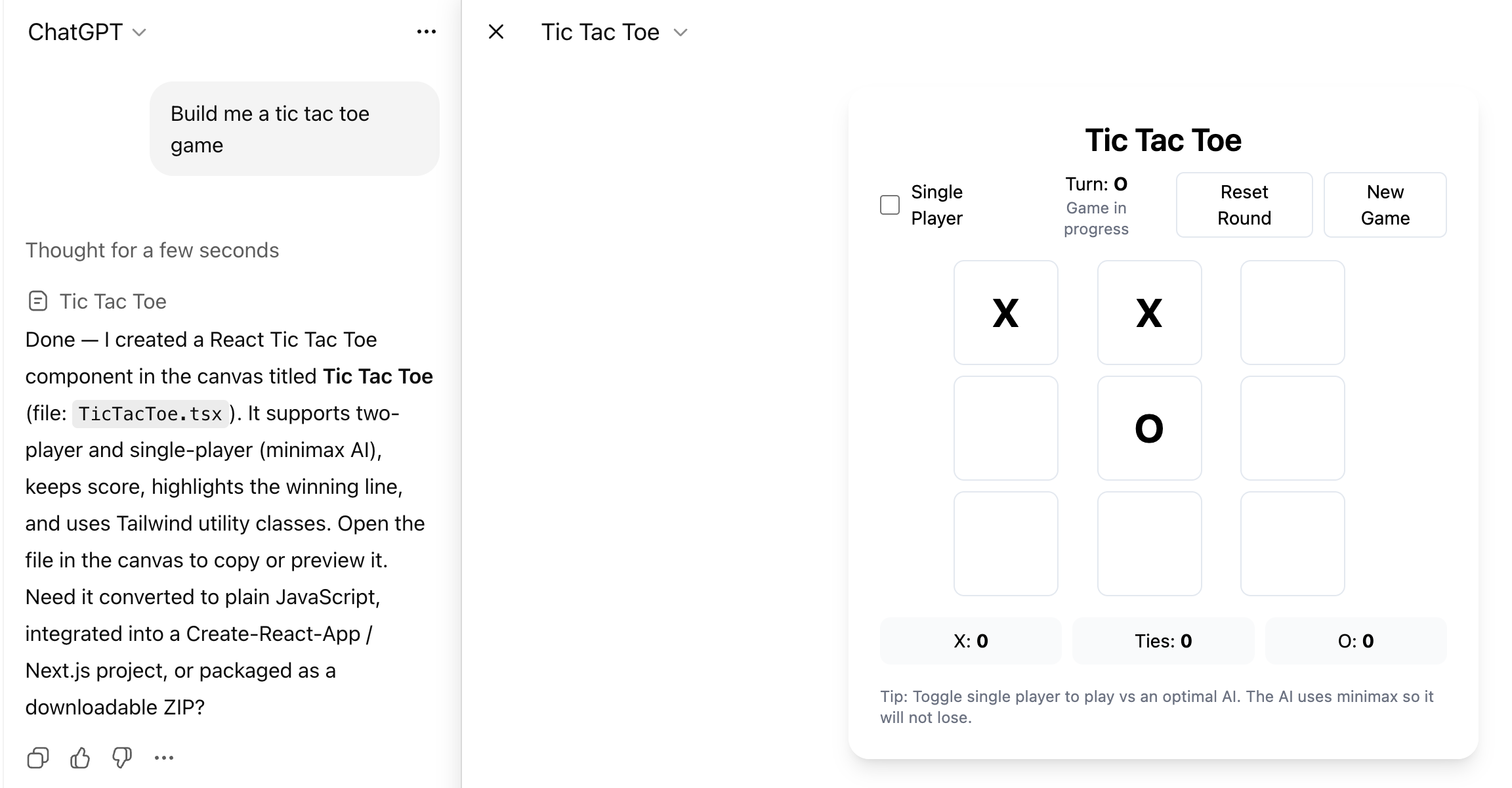

What is Vibe Coding? Simply put, instead of asking your development team to build something, you ask an AI Agent. As an example, when asking ChatGPT to “build a tic tac toe game”, in a minute or less, you have a working game:

This is amazing, and in theory, this opens up the potential for an exponential boost to the productivity of software developers - maybe even replacing them altogether.

A few months back, when the term first saw daylight, the majority of vibe coding posts on LinkedIn seemed to describe how easily non-technical people could build and ship software, creating million-dollar products. These days, however, the majority of posts seem to be from people searching for examples of million-dollar products built through Vibe Coding - where did all these successful vibe-coded projects and products end up?

TL;DR: Conclusion

After spending 6 months Vibe Coding, I can safely conclude that Vibe Coding per its definition does not work. Vibe Coding a prototype, or something you will quickly throw away, will work, but do not fool yourself (or your product manager) into believing that this will ever make it to production.

However, coding with an AI Agent in the driver’s seat (a.k.a. AI Coding or Agentic Development) is very, very useful. When done carefully and with skill, this will increase your productivity, sometimes “only” by 2x, other times by 100x.

These are my observations and my current belief:

Can you vibe code software from zero to production?

Absolutely not

Will software engineers be replaced by AI Agents any time soon?

Certainly not

Do AI agents improve your productivity?

Definitely!

How to benefit from Vibe / AI Coding

The following are my recommendations, if you want to use Vibe Coding (ish) for a real-world product in Q3 2025. Nothing you find here is groundbreaking, but everything is based on my own experience (which you will find further down).

1. Don’t Vibe Code

Depending on the size of the product, Vibe Coding is not a good decision. I tried to Vibe Code a React Native app, a framework written in a language the LLM models should be well-versed in; it should be perfect for Vibe Coding.

And it definitely worked magic sometimes. But the end product? Not shippable in any way. Mainly due to performance issues, which stem from very bad spaghetti code.

If you stay true to the definition of Vibe Coding on Wikipedia:

“A key part of the definition of vibe coding is that the user accepts code without full understanding.”

And:

“If an LLM wrote every line of your code, but you’ve reviewed, tested, and understood it all, that’s not vibe coding in my book—that’s using an LLM as a typing assistant.”

then it’s not possible to Vibe Code anything that targets a production environment. Multiple times during development I had to step in and either handhold the agent by pointing to the exact code to optimize, or simply do the necessary refactor myself.

2. Instead, AI Code

To quote Warp (which I’m getting more and more fond of), prompt, review, edit and ship.

Simply put, you should treat the AI Agent as a very talented and very capable junior developer. And what do we do with junior developers? We read their PRs very thoroughly. We test their code very extensively.

So this is not Vibe Coding per definition, but it will get you much further, much faster. Do this:

- Ask the Agent to implement something (the more details, the better), but ask it to plan first

- Read, understand and agree to this plan

- Don’t bother approving every individual change it makes while working - just let it do its magic

- When the Agent is finished, perform a Code Review

- Test it

- Approve it

- Git add and commit it (easy rollback)

This workflow will get you far, but if you enjoy coding, and enjoy the dopamine hit you get when something is working, you will probably find this less satisfying.

3. Write tests

Or have the AI write tests. Never before has writing automated tests been easier, and never before has it been as important.

Having a decent test suite will save your ass, because at some point, the Agent will go rogue.

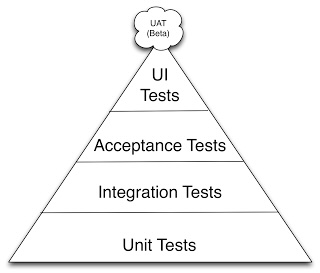

I don’t think there’s much groundbreaking here, and if you already have good test habits, there’s nothing new to pick up really. The good ol’ triangle still holds true.
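As a minimal sketch of the kind of test that catches an Agent changing something it was never asked to touch, the snippet below pins down the configuration of a dialog. Everything in it is an assumption made for illustration: `DialogConfig`, `buildConfirmDialog` and the small `assertDeepEqual` helper are hypothetical names, and in a real project a test runner such as Jest would take the helper's place.

```typescript
// Hypothetical example: DialogConfig and buildConfirmDialog are illustrative
// names, not taken from the actual app.
interface DialogConfig {
  title: string;
  confirmLabel: string;
  cancelLabel: string;
  dismissible: boolean;
}

function buildConfirmDialog(title: string): DialogConfig {
  return { title, confirmLabel: "OK", cancelLabel: "Cancel", dismissible: true };
}

// Tiny stand-in for a test runner's deep-equality assertion.
function assertDeepEqual<T>(actual: T, expected: T, label: string): void {
  const a = JSON.stringify(actual);
  const e = JSON.stringify(expected);
  if (a !== e) {
    throw new Error(`${label}: expected ${e}, got ${a}`);
  }
}

// Regression test: throws as soon as the dialog's configuration drifts from
// what was agreed, no matter which feature the Agent was working on.
function testConfirmDialogIsUnchanged(): void {
  assertDeepEqual(
    buildConfirmDialog("Leave club?"),
    { title: "Leave club?", confirmLabel: "OK", cancelLabel: "Cancel", dismissible: true },
    "confirm dialog",
  );
}

testConfirmDialogIsUnchanged();
```

The point is not the helper itself but the habit: once a piece of behavior is approved, a test like this turns a silent, unrelated change into a loud failure.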

4. Add context to your model

Providing the model with relevant information will greatly improve both the quality and the speed (less back and forth) of the agent.

These days, there are many possibilities:

- Cursor / Warp files

  These tell your model what to do and what not to do. The Agent does tend to forget these rules from time to time, so if it feels like the rules are being disregarded, re-run the prompt and remind it about them.

- External Documentation

  Point your LLM to some external documentation, e.g. Swagger documentation for endpoints. This will make your life much easier. In Cursor and Warp there is a dedicated section for this in the settings.

- In-repository Documentation

  A trick that Tom Blomfield shared on LinkedIn. Keep documentation inline in your repository; that ensures the Agent is aware of it.

- MCP Servers

  The new black, though it seems to have faded slightly over the past months. Still very useful.

Example from my experience

In the beginning, the Agent didn’t know about my API, so I had to explain it every time. Once I made the API documentation available to the Agent, I no longer had to provide these details, and it was better able to guess my intentions (which APIs to call when). A very small change that made my life easier.

5. Experiment with new models and tools

As software developers we are accustomed to having that one IDE we swear by. This, you will have to let go.

These past 6 months I’ve been through Cursor, Windsurf, back to Cursor, onto Claude Code, back to Cursor and currently on to Warp.

You will also want to experiment with new models. In my experience, upgrading to new models, or using a paid model instead of the free ones, rarely has a huge impact. Sometimes you will find the Agent stuck and switching models might help. Unfortunately in my experience, it often didn’t help, and I would eventually have to turn to debugging myself.

That’s it folks!

If you only came for my tips and tricks, you are free to leave. Thanks for reading :-)

Why I turned to Vibe Coding

I had a unique and brilliant idea for a new app for the biking community. I’m an enthusiastic road bike rider myself, so this seemed like the perfect idea to combine two great interests of mine.

Being an experienced web developer, I had no problem putting down the backend - this was quickly done with FastAPI, a Python framework - no vibe coding, and a minimal use of AI.

But with zero experience in Swift, and my Java experience dating back to my school days, the actual App itself would be a bigger challenge. I consider myself a solid React developer, and I do have some React Native experience, but this also dates a few years back, so I would definitely need to pick up some pieces.

With the ambition to move fast and get this app shipped asap, Vibe Coding seemed like a perfect fit. With Vibe Coding I could add a new resource to the team (the AI agent), who knew everything about React Native, and this resource could be in charge of building the entire frontend app. Neat, right!

The tech stack was dead simple: React Native for building the user interface and Maestro for E2E testing. As for AI models, I went through Claude Sonnet 3.7, 4 and lately 4.1. I also used GPT 4 and more recently GPT 5, when Claude didn’t suffice. I used Cursor, Windsurf, Claude Code and more recently Warp.

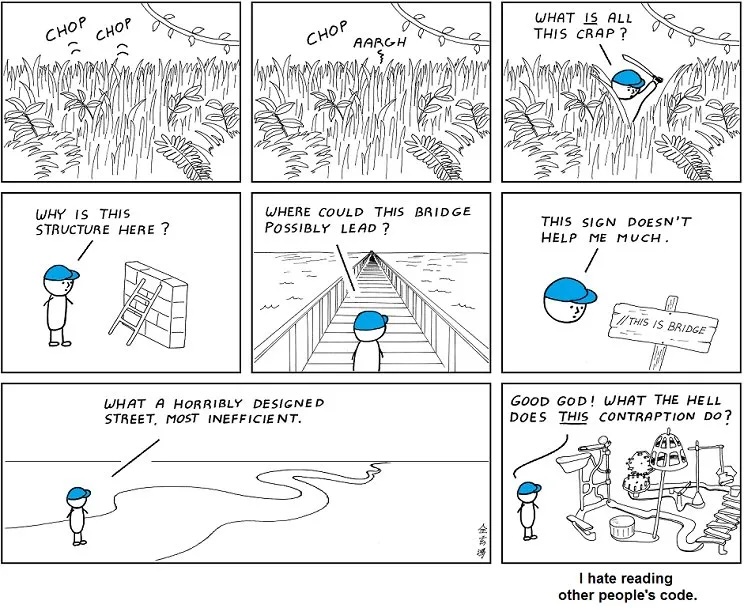

My experience Vibe Coding

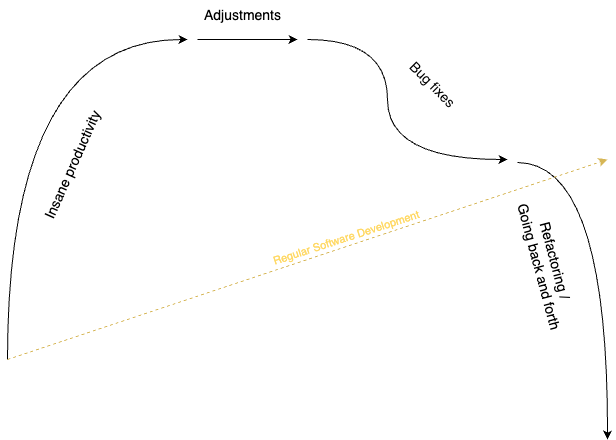

This image sums up my experience with Vibe Coding. It’s by no means scientific, so take it with a grain of salt:

From zero to hero

This is where Vibe Coding works - at least in the beginning. I gained an insane amount of productivity, and I felt truly empowered. That feeling is quite nice! Sometimes the boost is 2x, other times it’s 100x.

It’s pretty straightforward: write a prompt, maybe share an image of the design, and after working for a minute or two, boom! - the functionality is ready to go.

The accuracy is typically around 70-80%, which is not bad, considering it did something I would probably have spent an hour or two doing, and it did that in just a minute or two.

Adjustments

An accuracy of 70%-80% is nice, but it will not cut it for production. So the next task is to make sure the design and behavior look and feel like they’re supposed to.

This typically happens through multiple smaller prompts, something like:

- Make the space between the sections larger - look at the image

- That was too much, make it a little smaller

- Adjust that space to 32px (after manually checking the distance in Figma)

- When you click the button, navigate to X scene

And to quote Andrej Karpathy, who coined the term Vibe Coding, it mostly works.

The biggest drawback of this phase is that I quickly became bored of waiting for the Agent to implement small tweaks. The waiting time is too short to do something else, but long enough to start feeling bored. My bet is this will improve with newer models, although I experienced no noticeable change when switching to new or more powerful models.

The Incompetent Agent

Unfortunately, it sometimes happens that the Agent simply does not understand what I’m trying to do, or fails to implement the necessary changes. In normal AI fashion, it convincingly tells me that it has successfully completed the task:

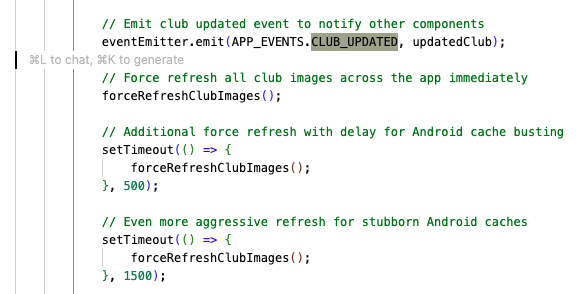

In Social Rider, it is possible to upload an image for a club. It would be nice, and expected, if this image updated throughout the app, everywhere the image was in use. This turned out too difficult for the AI Agent to implement.

After multiple prompts (e.g. “new images are still not updated, ensure they are up-to-date throughout the app wherever they are rendered”), the Agent more or less just kept retrying the same approach.

This example is pretty trivial and easy to understand, but that is the exception rather than the rule. More often, you would open up Pandora’s box and find some very convoluted yet verbose code - in other words, you would find a mess!
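To make the club image requirement concrete, here is a minimal sketch of one common way to get that behavior: keep the image URL in a single shared store and let every screen that renders it subscribe to changes. This is an illustration under assumptions, not a description of how Social Rider works; `ClubImageStore`, its methods and the `?v=` cache-busting parameter are all hypothetical.

```typescript
// Hypothetical sketch: a single source of truth for club image URLs.
type Listener = (url: string) => void;

class ClubImageStore {
  private urls = new Map<string, string>();
  private listeners = new Map<string, Set<Listener>>();

  // Register a listener for one club; returns a function that unsubscribes.
  subscribe(clubId: string, listener: Listener): () => void {
    const set = this.listeners.get(clubId) ?? new Set<Listener>();
    this.listeners.set(clubId, set);
    set.add(listener);

    // Deliver the current URL immediately, if one is already known.
    const current = this.urls.get(clubId);
    if (current !== undefined) {
      listener(current);
    }
    return () => {
      set.delete(listener);
    };
  }

  // Called after an upload. The version suffix changes the URL, so image
  // caches keyed by URL treat it as a new image (an assumption about caching).
  setImage(clubId: string, baseUrl: string, version: number): void {
    const url = `${baseUrl}?v=${version}`;
    this.urls.set(clubId, url);
    this.listeners.get(clubId)?.forEach((listener) => listener(url));
  }
}
```

With something like this in place, a new upload calls `setImage` once, and every subscribed component receives the new URL instead of each screen holding on to its own stale copy.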

The Agent also favors duplication: unless specifically prompted otherwise, it will often just recreate an entire function instead of bothering to extract the functionality and make it reusable from multiple places.

Maybe this is not a problem in the long run? If the AI Agent is the only one to ever touch the code, it doesn’t really matter whether it needs to change something in one place or in 100 places. But until we get to a place where true Vibe Coding seems feasible, it does in fact matter quite a lot (at some point, it will become your burden to maintain).
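The duplication pattern is easy to picture with a small sketch. The function names and the distance formatting rule below are hypothetical and only serve as an illustration; the shape of the problem is what matters.

```typescript
// Hypothetical example: names and formatting rules are illustrative only.

// Before: the same rounding logic recreated at each call site.
function rideCardSubtitle(distanceMeters: number): string {
  const km = Math.round(distanceMeters / 100) / 10;
  return `${km} km`;
}

function clubStatsLabel(distanceMeters: number): string {
  const km = Math.round(distanceMeters / 100) / 10;
  return `Total: ${km} km`;
}

// After: the shared logic lives in one helper that both call sites reuse.
function formatDistanceKm(distanceMeters: number): string {
  // Round to one decimal place, e.g. 12345 m becomes "12.3 km".
  const km = Math.round(distanceMeters / 100) / 10;
  return `${km} km`;
}

function rideCardSubtitleShared(distanceMeters: number): string {
  return formatDistanceKm(distanceMeters);
}

function clubStatsLabelShared(distanceMeters: number): string {
  return `Total: ${formatDistanceKm(distanceMeters)}`;
}
```

Both versions produce the same output today. The difference shows up the day the rounding rule changes: the first version needs an edit in every copy, the second in exactly one place.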

The Rogue Agent

Multiple times, I also experienced the Agent going rogue. While testing some feature I was currently working on, I would discover that something entirely unrelated had changed.

Take this message dialog as an example. I had this nice looking one implemented and working:

Suddenly, this message dialog had changed to this look:

I don’t think we should compare AI Agents to humans too much, since after all they are a tool to use, but I don’t believe many humans would go and implement a change like that!

The Failure of Vibe Coding

So my workflow with Vibe Coding pretty much consisted of the following steps, over and over:

1. Make the Agent implement something

2. Make the Agent adjust it to fit the specification

3. After too many prompts (how deep is the rabbit hole), realize that the Agent is unable to implement it

4. Open up Pandora’s box and discover the mess it made

5. Clean up the mess

Steps 4 and 5 would only happen IF step 3 happened, and this was not every time. Most of the time it worked well. But the major problem with Vibe Coding is that when you do need to go to steps 4 and 5, which will happen at some point, you have to comprehend a lot of the code from the previous features that the Agent successfully implemented.

And this is exactly why Vibe Coding doesn’t work, and you should turn towards an Agentic Development cycle instead.

All the time you gained by Vibe Coding features will suddenly be lost as you try to hack your way through the Agent’s code.

This turned out okay for me, because I understand React Native and am able to debug and refactor myself. Had I decided to go with Swift and Java, I wouldn’t have been able to ship the end product - I’m 100% sure I would have reached a dead end, and then I would have had to make a tough decision - go learn Swift, or rewrite in React Native? I’m too old to learn something new, so that would result in a major rewrite.

The Result

I managed to build an app through Vibe Coding, although it often turned out to be more AI Coding than actual Vibe Coding.

There’s definitely a huge benefit to Vibe Coding when building prototypes (e.g. using Lovable), which is something we do and benefit from every day at CyberPilot. This is useful for many reasons, e.g. when quickly wanting to try out some idea or when handing over specifications to developers.

Did I save time due to Vibe Coding? Certainly, but only because I had the skill to take the driver’s seat when the Agent failed to untangle its own messy code. Had I not been able to do that, I might have ended up like Carla Rover in “Vibe coding has turned senior devs into ‘AI babysitters,’ but they say it’s worth it”.

That’s it folks! I don’t have much else to say about Vibe Coding, and if you made it this far, then thanks for reading.

And if no one ever makes it this far, at least I had a lot of fun writing it :)